opencv中有现成的边缘检测和线检测算法,也有对应的gpu实现。但没有曲线检测的算法。下面就实现一个基于Gabor滤波的曲线检测算法,并使用gpu来提高运算速度。算法参考了GitHub上的代码,通过控制Gabor滤波的参数来控制检测的线宽等特征,并使用了FFT来代替卷积从而提升速度。

1.算法来源

算法参考了https://github.com/Po-Ting-lin/HairRemoval里面的代码。

2.算法流程

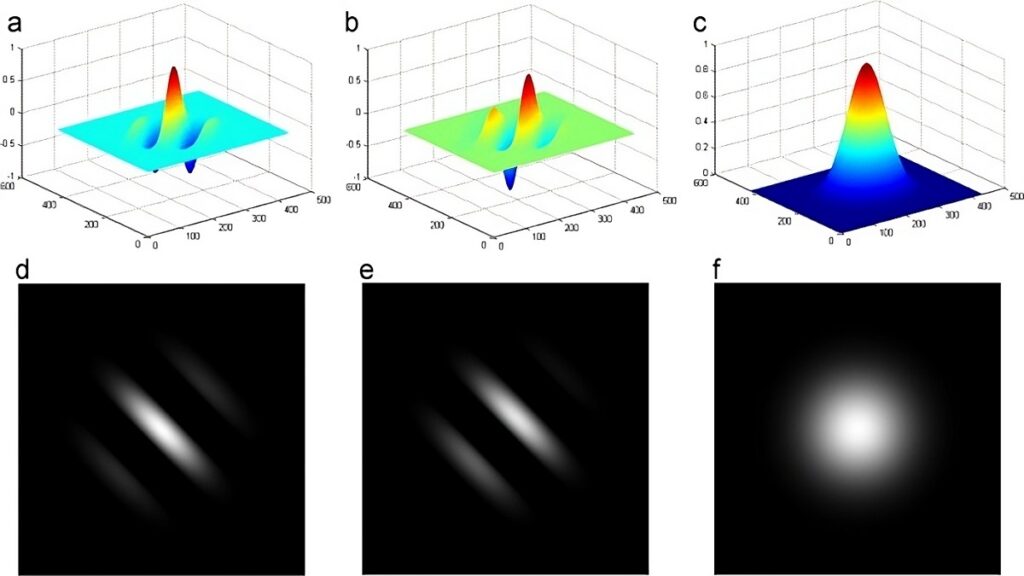

- 根据预输入的参数,生成Gabor滤波矩阵。不同方向生成的矩阵不一样,最多8个方向。

- 将各个Gabor滤波矩阵与原图片进行卷积。为了加快运行速度,使用了fft来加速卷积的运算速度

- 取卷积后的图片各方向的最大值组成最后的结果输出

- 根据实际进行2值化等处理,输出检测出的曲线

3.代码

头文件 DetectLineCUDA.h 的主要部分。关键是构造函数,输入了Gabor滤波的参数,可以用来调整检测曲线的宽带,长度等。

class DetectLineCUDA

{

public:

DetectLineCUDA(int cols, int rows, std::vector<int> _angles,int MinArea = 20,float Alpha = 1.15f,float Beta = 0.55f,float HairWidth = 12.0f);

void detect(cv::cuda::GpuMat& src, cv::cuda::GpuMat& mask);

~DetectLineCUDA();

private:

.............

};

#endif // DETECTLINECUDA_H

- 构造函数初始化了一些变量

- detect函数是算法运行的主函数,通过_initGaborFilterCube函数生成滤波矩阵,随后通过一个循环进行快速傅里叶,计算各个方向上的卷积。最后通过_cubeReduction函数合并结果

DetectLineCUDA::DetectLineCUDA(int cols, int rows, std::vector<int> _angles, int MinArea, float Alpha, float Beta, float HairWidth)

{

Width = cols;

Height = rows;

NumberOfFilter = 8;

this->MinArea = MinArea;

this->Alpha = Alpha;

this->Beta = Beta;

this->HairWidth = HairWidth;

SigmaX = 8.0f * (sqrt(2.0 * log(2) / CV_PI)) * HairWidth / Alpha / Beta / CV_PI;

SigmaY = 0.8f * SigmaX;

KernelRadius = ceil(3.0f * SigmaX); // sigmaX > sigamY

KernelW = 2 * KernelRadius + 1;

KernelH = 2 * KernelRadius + 1;

KernelX = KernelRadius;

KernelY = KernelRadius;

FFTH = snapTransformSize(Height + KernelH - 1);

FFTW = snapTransformSize(Width + KernelW - 1);

cufftPlan2d(&_fftPlanFwd, FFTH, FFTW, CUFFT_R2C);

cufftPlan2d(&_fftPlanInv, FFTH, FFTW, CUFFT_C2R);

this->angles.insert(this->angles.end(), _angles.begin(), _angles.end());

}

int DetectLineCUDA::snapTransformSize(int dataSize) {

........

}

........

void DetectLineCUDA::detect(cv::cuda::GpuMat& src, cv::cuda::GpuMat& mask) {

float* d_PaddedData;

float* d_Kernel;

float* d_PaddedKernel;

float* d_DepthResult;

........

//// init data

float* h_kernels = _initGaborFilterCube();

cudaMemcpy(d_Kernel, h_kernels, KernelH * KernelW * NumberOfFilter * sizeof(float), cudaMemcpyHostToDevice);

cuda::GpuMat src_f;

if (src.channels() == 3) {

cuda::GpuMat src_gray;

src.convertTo(src_gray, CV_32FC3);

cuda::cvtColor(src_gray, src_f,cv::COLOR_BGR2GRAY);

}

else {

src.convertTo(src_f, CV_32F);

}

cuda::GpuMat d_src_c_ptr_mat(src.rows, src.cols, CV_32FC1, d_src_c_ptr);

cuda::GpuMat pand_mat(FFTH, FFTW, CV_32FC1, d_PaddedData);

cuda::copyMakeBorder(src_f, d_src_c_ptr_mat, 0, FFTH - src.rows, 0, FFTW - src.cols, BORDER_WRAP);

d_src_c_ptr_mat.copyTo(pand_mat);

// FFT data

cufftExecR2C(_fftPlanFwd, (cufftReal*)d_PaddedData, d_DataSpectrum);

cudaDeviceSynchronize();

for (int i = 0; i < angles.size(); i++) {

int kernel_offset = angles[i] * KernelH * KernelW;

int data_offset = i * FFTH * FFTW;

_padKernel(d_PaddedKernel, d_Kernel + kernel_offset);

cv::cuda::GpuMat raw_dst_mat(FFTH, FFTW, CV_32FC1, d_PaddedKernel);

cv::Mat cpumat;

raw_dst_mat.download(cpumat);

// FFT kernel

cufftExecR2C(_fftPlanFwd, (cufftReal*)d_PaddedKernel, (cufftComplex*)d_KernelSpectrum);

cudaDeviceSynchronize();

//// mul

_modulateAndNormalize(d_TempSpectrum, d_DataSpectrum, d_KernelSpectrum, 1);

cufftExecC2R(_fftPlanInv, (cufftComplex*)d_TempSpectrum, (cufftReal*)(&d_DepthResult[data_offset]));

cudaDeviceSynchronize();

cv::cuda::GpuMat d_DepthResult_mat(FFTH, FFTW, CV_32FC1, d_DepthResult + data_offset);

d_DepthResult_mat.download(cpumat);

}

_cubeReduction(d_DepthResult, d_Result);

cuda::GpuMat d_Result_mat(Height , Width,CV_8UC1, d_Result);

d_Result_mat.copyTo(mask);

.........

}

float* DetectLineCUDA::_initGaborFilterCube() {

float* output = new float[KernelW * KernelH * NumberOfFilter];

float* output_ptr = output;

for (int curNum = 0; curNum < NumberOfFilter; curNum++) {

float theta = (float)CV_PI / NumberOfFilter * curNum;

for (int y = -KernelRadius; y < KernelRadius + 1; y++) {

for (int x = -KernelRadius; x < KernelRadius + 1; x++, output_ptr++) {

float xx = x;

float yy = y;

float xp = xx * cos(theta) + yy * sin(theta);

float yp = yy * cos(theta) - xx * sin(theta);

*output_ptr = exp((float)(-CV_PI) * (xp * xp / SigmaX / SigmaX + yp * yp / SigmaY / SigmaY)) * cos((float)CV_2PI * Beta / HairWidth * xp + (float)CV_PI);

}

}

}

return output;

}cuda 文件 DetectLinekernel.cu

里面的一些函数可以使用opencv里的函数代替,但感觉意义不大,就直接使用原来的了。

函数调用的方法

Mat inputmat = imread("test.bmp",cv::IMREAD_GRAYSCALE);

cv::bitwise_not(inputmat,inputmat);//算法找寻的是黑色的曲线,所以将原始图片取反

cuda::GpuMat inputgpu;

inputgpu.upload(inputmat);

std::vector<int> angles;

angles.push_back(ANGLE_0);

angles.push_back(ANGLE_23);

angles.push_back(ANGLE_45);

angles.push_back(ANGLE_68);

angles.push_back(ANGLE_90);

angles.push_back(ANGLE_113);

angles.push_back(ANGLE_135);

angles.push_back(ANGLE_157);

DetectLineCUDA dl(inputmat.cols, inputmat.rows,angles,20,1.95f,0.85f,8.0f);

cv::cuda::GpuMat m_g = cuda::createContinuous(inputmat.size(), CV_8UC1);

dl.detect(inputgpu, m_g);

Mat m;

m_g.download(m);

imwrite("m.bmp", m);// m 就是分析出的曲线,后续可以再进行2值化,去掉一些干扰算法效果